Description of Neural Processing Unit (NPU)

I. What is NPU?

Demand for neural network and machine learning processing is only beginning to surge. Traditional CPUs and GPUs can perform these tasks, but NPUs optimized specifically for neural networks can outperform them. Gradually, such neural network tasks will be handled by dedicated NPU units.

NPU (Neural Processing Unit) is a specialized processor for neural network workloads. It adopts a "data-driven parallel computing" architecture and is particularly good at processing massive amounts of multimedia data such as video and images.

An NPU is also an integrated circuit, but unlike a single-function application-specific integrated circuit (ASIC), it handles more complex and flexible workloads. It is usually programmed, in software or in hardware, around the characteristics of neural network computation to serve those specific purposes.


The key strength of an NPU is its ability to run many parallel threads. Special hardware-level optimizations take this further, such as giving many distinct processing cores their own easily accessible caches. These high-capacity cores are simpler than those of a typical general-purpose processor because they do not need to handle many different types of tasks. Together, these optimizations make NPUs more efficient, which is why so much research and development is invested in such ASICs.

One advantage of NPUs is that their design centers on low-precision arithmetic, novel dataflow architectures, or in-memory computing. Unlike GPUs, they are more concerned with throughput than with latency.
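To make "low-precision arithmetic" concrete, the sketch below quantizes a few floating-point weights and inputs to 8-bit integers, computes the dot product with integer multiplies and a wide accumulator, and rescales the result. The symmetric scheme, the [-127, 127] range, and the example values are assumptions for illustration, not a description of any particular NPU.

```python
# Minimal sketch: symmetric int8 quantization with a wide integer accumulator.
# The scale choice, the [-127, 127] range, and the sample data are assumptions.
from typing import List, Sequence


def quantize(values: Sequence[float], scale: float) -> List[int]:
    """Map floats to int8 codes: q = round(v / scale), clamped to [-127, 127]."""
    return [max(-127, min(127, round(v / scale))) for v in values]


def int8_dot(qa: Sequence[int], qb: Sequence[int]) -> int:
    """Integer dot product; each int8 x int8 product fits in 16 bits, and the
    running sum is kept in a wider accumulator (typically int32 in hardware)."""
    acc = 0
    for x, y in zip(qa, qb):
        acc += x * y
    return acc


weights = [0.42, -0.13, 0.88, -0.57]
inputs = [1.0, 0.5, -0.25, 0.75]

w_scale = max(abs(w) for w in weights) / 127
x_scale = max(abs(x) for x in inputs) / 127

qw = quantize(weights, w_scale)
qx = quantize(inputs, x_scale)

# Rescale the integer result back to real units and compare with float math.
approx = int8_dot(qw, qx) * w_scale * x_scale
exact = sum(w * x for w, x in zip(weights, inputs))
print(f"int8 result {approx:.4f} vs float result {exact:.4f}")
```

The integer path trades a small rounding error for much cheaper multipliers and narrower memory traffic, which is the trade-off low-precision NPU designs rely on.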

II. NPU processor module

This NPU is designed specifically for IoT AI: it accelerates neural network operations and addresses the low efficiency of traditional chips when running them. The NPU processor includes modules for multiply-add, activation functions, 2D data operations, and decompression, among others.

The multiply-add module computes matrix multiply-add, convolution, dot products, and similar functions. The NPU contains 64 MACs and the SNPU contains 32.
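The effect of MAC count can be sketched with a simple model in which every MAC unit performs at most one multiply-accumulate per cycle on its own lane. This cycle model is hypothetical (the text does not describe how the module schedules work); it only shows why a 64-MAC NPU needs half as many passes as a 32-MAC SNPU for the same dot product.

```python
# Hypothetical model of a MAC array: each "cycle", every MAC unit performs at
# most one multiply-accumulate on its own lane. Real NPU scheduling is not
# described in the text; this only illustrates why more MACs means fewer cycles.
from typing import Sequence, Tuple


def mac_array_dot(a: Sequence[int], b: Sequence[int], num_macs: int = 64) -> Tuple[int, int]:
    """Return (dot product of a and b, number of MAC-array cycles used)."""
    if len(a) != len(b):
        raise ValueError("vectors must have the same length")
    lanes = [0] * num_macs  # one accumulator per MAC unit
    cycles = 0
    for start in range(0, len(a), num_macs):
        # All lanes of one cycle run in parallel in hardware; here we loop over them.
        for lane in range(min(num_macs, len(a) - start)):
            i = start + lane
            lanes[lane] += a[i] * b[i]
        cycles += 1
    # Final reduction of the per-lane accumulators (not counted as MAC cycles).
    return sum(lanes), cycles


a = list(range(256))
b = [2] * 256
print(mac_array_dot(a, b, num_macs=64))  # -> (65280, 4)  NPU-sized array
print(mac_array_dot(a, b, num_macs=32))  # -> (65280, 8)  SNPU-sized array
```

Convolution and matrix multiplication reduce to many such dot products, so the same halving of passes applies to them in this simplified model.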

The activation function module implements neural network activation functions using polynomial fitting of up to 12th order. The NPU has 6 MACs for this module and the SNPU has 3.
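Polynomial fitting lets a nonlinear activation be computed with nothing but multiplies and adds, which is why the module can be built from MACs. The sketch below fits the sigmoid with a degree-12 polynomial and evaluates it with the Clenshaw recurrence. Chebyshev interpolation, the [-6, 6] fitting range, and clamping of out-of-range inputs are all assumptions; the text does not say which fitting method or range the hardware uses.

```python
# Minimal sketch: fit sigmoid with a degree-12 polynomial and evaluate it using
# only multiplies and adds. Chebyshev interpolation is an assumed fitting method
# (the text only says "up to 12th order"); the [-6, 6] range is also assumed.
import math
from typing import Callable, List

DEGREE = 12         # highest polynomial order, as in the text
LO, HI = -6.0, 6.0  # assumed fitting range; inputs outside it are clamped


def sigmoid(x: float) -> float:
    return 1.0 / (1.0 + math.exp(-x))


def chebyshev_fit(f: Callable[[float], float], degree: int, lo: float, hi: float) -> List[float]:
    """Chebyshev coefficients of the polynomial interpolating f on [lo, hi]."""
    n = degree + 1
    coeffs = []
    for j in range(n):
        total = 0.0
        for k in range(n):
            theta = math.pi * (k + 0.5) / n  # Chebyshev node angle
            x = 0.5 * (hi - lo) * math.cos(theta) + 0.5 * (hi + lo)
            total += f(x) * math.cos(j * theta)
        coeffs.append(2.0 * total / n)
    return coeffs


def chebyshev_eval(coeffs: List[float], x: float, lo: float, hi: float) -> float:
    """Clenshaw recurrence: each step is a couple of multiply-adds (MAC-friendly)."""
    x = min(max(x, lo), hi)              # clamp to the fitted range
    y = (2.0 * x - lo - hi) / (hi - lo)  # map [lo, hi] onto [-1, 1]
    d = dd = 0.0
    for c in reversed(coeffs[1:]):
        d, dd = 2.0 * y * d - dd + c, d
    return y * d - dd + 0.5 * coeffs[0]


COEFFS = chebyshev_fit(sigmoid, DEGREE, LO, HI)
worst_error = max(
    abs(chebyshev_eval(COEFFS, x / 100, LO, HI) - sigmoid(x / 100))
    for x in range(-1000, 1001)
)
print(f"{len(COEFFS)} coefficients, max error on [-10, 10]: {worst_error:.4f}")
```

The coefficients are computed once offline; at run time only the 12-step recurrence runs, so a few MACs per input are enough.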

The 2D data operation module implements operations on a plane, such as sampling and copying plane data. The NPU and the SNPU each have one MAC for this module.
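A few plane-level operations of the kind this module handles are sketched below. The exact operation set of the hardware is not given in the text; stride subsampling, nearest-neighbour upsampling, and window copying are assumed examples of "sampling" and "copying plane data".

```python
# Minimal sketch of plane-level operations like those the 2D data module
# performs. Stride subsampling, nearest-neighbour upsampling, and window copy
# are assumed examples; the hardware's real operation set is not specified.
from typing import List

Plane = List[List[int]]


def subsample(plane: Plane, stride: int) -> Plane:
    """Keep every `stride`-th row and column."""
    return [row[::stride] for row in plane[::stride]]


def upsample_nearest(plane: Plane, factor: int) -> Plane:
    """Repeat each value `factor` times in both directions."""
    out: Plane = []
    for row in plane:
        wide = [v for v in row for _ in range(factor)]
        out.extend(list(wide) for _ in range(factor))
    return out


def copy_window(plane: Plane, top: int, left: int, height: int, width: int) -> Plane:
    """Copy a height x width window starting at (top, left) into new rows."""
    return [row[left:left + width] for row in plane[top:top + height]]


plane = [[r * 4 + c for c in range(4)] for r in range(4)]
print(subsample(plane, 2))             # -> [[0, 2], [8, 10]]
print(upsample_nearest([[1, 2]], 2))   # -> [[1, 1, 2, 2], [1, 1, 2, 2]]
print(copy_window(plane, 1, 1, 2, 2))  # -> [[5, 6], [9, 10]]
```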

The decompression module decompresses weight data. To cope with the limited memory bandwidth of IoT devices, the NPU compiler can compress the neural network's weights, achieving 6 to 10 times compression with almost no loss of accuracy.
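The split between compile-time compression and on-device decompression can be sketched as follows. The text does not say which scheme the NPU compiler uses; pruning small weights to zero, int8 quantization, and zlib (DEFLATE) coding are assumed stand-ins, and the ratio the sketch prints depends entirely on the synthetic data, so it says nothing about the 6 to 10 times figure above.

```python
# Minimal sketch of an offline compress / on-device decompress pipeline for
# weights. Pruning, int8 quantization, and zlib are assumed stand-ins for the
# NPU compiler's unspecified scheme; the printed ratio depends on the data.
import random
import zlib
from array import array
from typing import List, Tuple


def compress_weights(weights: List[float], prune_below: float) -> Tuple[bytes, float]:
    """Compiler side: prune small weights, quantize to int8, entropy-code the bytes."""
    scale = max(abs(w) for w in weights) / 127
    codes = array("b", (0 if abs(w) < prune_below else round(w / scale) for w in weights))
    return zlib.compress(codes.tobytes(), 9), scale


def decompress_weights(blob: bytes, scale: float) -> List[float]:
    """Device side: the work a decompression module does before the MACs run."""
    codes = array("b")
    codes.frombytes(zlib.decompress(blob))
    return [q * scale for q in codes]


rng = random.Random(0)
weights = [rng.gauss(0.0, 0.05) for _ in range(4096)]
blob, scale = compress_weights(weights, prune_below=0.05)
restored = decompress_weights(blob, scale)

ratio = (4 * len(weights)) / len(blob)  # compared with float32 storage
print(f"compressed {4 * len(weights)} bytes to {len(blob)} bytes ({ratio:.1f}x)")
```

Only the compressed bytes cross the narrow memory bus; expansion back to usable weights happens next to the compute units.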

III. NPU: The Core Carrier of Mobile AI

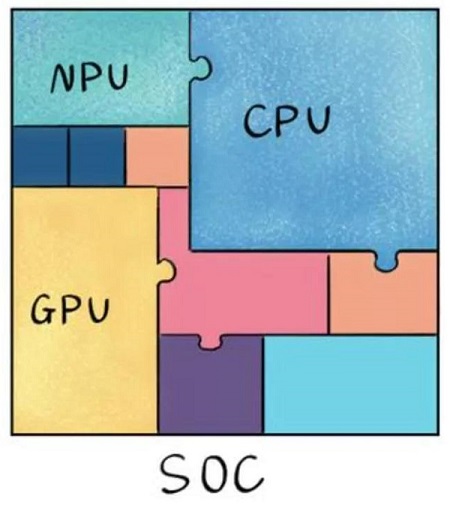

A mobile phone depends on its SoC, a chip only the size of a fingernail that nonetheless contains all of the phone's "internal organs". Its integrated modules work together to implement the phone's functions: the CPU keeps switching between apps smooth, the GPU loads game graphics quickly, and the NPU is responsible for AI computation and AI applications.

The story starts with Huawei, the first company to use an NPU (Neural Network Processing Unit) in a mobile phone and the first to integrate an NPU into a mobile processor.

Huawei went on to launch an NPU with its own architecture. Instead of the traditional scalar and vector computing modes, this in-house NPU uses a 3D Cube to accelerate matrix computation. As a result, it processes more data per unit time and delivers more AI computing power per unit of power consumption, an order-of-magnitude improvement over traditional CPUs and GPUs, with better energy efficiency.
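The idea behind a cube unit can be illustrated by tiling a matrix multiplication into cube-shaped blocks, where each block of multiply-adds would execute in parallel in hardware. The cube edge of 16 used here is an assumption for the example (the text does not give the cube's size), and the Python loops only model the work; they do not run in parallel.

```python
# Illustrative model of cube-based matrix multiplication. The cube edge of 16
# is an assumption for the example (not stated in the text); in hardware,
# every multiply-add inside one cube step would execute in parallel.
from typing import List, Tuple

Matrix = List[List[float]]
CUBE = 16  # assumed cube edge length: one step covers up to CUBE**3 multiply-adds


def cube_matmul(a: Matrix, b: Matrix, cube: int = CUBE) -> Tuple[Matrix, int]:
    """Compute C = A @ B tile by tile; return (C, number of cube steps)."""
    m, k, n = len(a), len(b), len(b[0])
    if any(len(row) != k for row in a):
        raise ValueError("inner dimensions do not match")
    c = [[0.0] * n for _ in range(m)]
    steps = 0
    for i0 in range(0, m, cube):
        for j0 in range(0, n, cube):
            for p0 in range(0, k, cube):
                # One cube step: up to cube x cube x cube multiply-adds on a block.
                for i in range(i0, min(i0 + cube, m)):
                    for j in range(j0, min(j0 + cube, n)):
                        acc = c[i][j]
                        for p in range(p0, min(p0 + cube, k)):
                            acc += a[i][p] * b[p][j]
                        c[i][j] = acc
                steps += 1
    return c, steps


n = 32
a = [[float(i + j) for j in range(n)] for i in range(n)]
identity = [[1.0 if i == j else 0.0 for j in range(n)] for i in range(n)]
c, steps = cube_matmul(a, identity)
print(steps, n ** 3)  # cube steps vs scalar multiply-adds -> 8 32768
```

In this model a 32 x 32 by 32 x 32 product takes 8 cube steps instead of 32,768 scalar multiply-adds, which is the sense in which a cube moves more data per unit time than scalar or vector units.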

Huawei first used an external design, the Cambricon NPU, in the Mate 10. One year later, it integrated Cambricon NPU IP into the Kirin 980, and a year after that it dropped Cambricon in favor of its own Da Vinci NPU in the Kirin 990.

Samsung's Galaxy phones also build an NPU into the mobile processor, using advanced neural networks to provide a higher level of visual intelligence on the Galaxy S20/S20+/S20 Ultra and Z Flip. The NPU powers the Scene Optimizer, improving recognition of content in photos and prompting the camera to adjust to the ideal settings for the subject, more accurately than on previous Galaxy models. It also lets the front camera blur the background of selfies to produce a defocus (bokeh) effect. In addition, the NPU supports on-device Bixby Vision.

IV. NPU and GPU

Although a GPU has strong parallel computing power, it does not work alone; it needs the CPU to cooperate, and the construction of neural network models and data streams still takes place on the CPU. GPUs also suffer from high power consumption and large size: the higher the performance, the larger the GPU, the higher its power draw, and the higher its price, which rules it out for some small and mobile devices. This is why the NPU emerged as a specialized chip with small size, low power consumption, high computational performance, and high computational efficiency.

An NPU works by simulating neurons and synapses at the circuit level and processing large numbers of them directly through a deep learning instruction set, in which a single instruction completes the processing of a whole group of neurons. Compared with CPUs and GPUs, the NPU improves operational efficiency by integrating storage and computation through synaptic weights.

CPUs and GPUs need thousands of instructions to complete this neuron processing, while an NPU needs only one or a few, which gives it a significant efficiency advantage in deep learning. Reported experimental results claim that, at the same power consumption, the NPU delivers 118 times the performance of a GPU.
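The instruction-count argument can be made concrete with a toy dense layer of 256 inputs and 64 neurons. Both instruction formats below are invented for illustration: a "scalar" machine issues one instruction per multiply-accumulate plus one per activation, while a hypothetical layer-level instruction processes the whole set of neurons at once. Both produce identical outputs.

```python
# Toy comparison of instruction counts for one dense layer. The scalar and
# layer-level "instructions" are hypothetical formats invented for illustration.
from typing import List, Sequence


def relu(v: float) -> float:
    return v if v > 0.0 else 0.0


def scalar_program(weights: Sequence[Sequence[float]]) -> List[tuple]:
    """Expand a dense layer into ("mac", neuron, weight, input_index) and ("act", neuron)."""
    prog: List[tuple] = []
    for neuron, row in enumerate(weights):
        for idx, w in enumerate(row):
            prog.append(("mac", neuron, w, idx))
        prog.append(("act", neuron))
    return prog


def run_scalar(prog: List[tuple], x: Sequence[float], neurons: int) -> List[float]:
    """Execute the scalar program one instruction at a time."""
    acc = [0.0] * neurons
    for instr in prog:
        if instr[0] == "mac":
            _, neuron, w, idx = instr
            acc[neuron] += w * x[idx]
        else:
            acc[instr[1]] = relu(acc[instr[1]])
    return acc


def layer_instruction(weights: Sequence[Sequence[float]], x: Sequence[float]) -> List[float]:
    """One hypothetical NPU-style instruction: weighted sums and activation for every neuron."""
    return [relu(sum(w * xi for w, xi in zip(row, x))) for row in weights]


inputs, neurons = 256, 64
weights = [[((i * 7 + j * 3) % 11 - 5) / 10 for j in range(inputs)] for i in range(neurons)]
x = [((j % 5) - 2) / 4 for j in range(inputs)]

prog = scalar_program(weights)
same_result = run_scalar(prog, x, neurons) == layer_instruction(weights, x)
print(len(prog), "scalar instructions vs 1 layer instruction; same result:", same_result)
```

Here the scalar version needs 64 x 256 + 64 = 16,448 instructions for the same work, which matches the "thousands of instructions" contrast in the text for even a small layer.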

V. Characteristics of different processing units

CPU - About 70% of the transistors are used to build the cache, with part of the rest used for the control unit, leaving few computing units. Suitable for logic and control operations.

GPU - Transistors are mainly used to build computing units with low computational complexity and are suitable for large-scale parallel computing. Mainly used for big data, backend servers, and image processing.

NPU - Simulates neurons at the circuit level and integrates storage and computation through synaptic weights. One instruction completes the processing of a group of neurons, improving operational efficiency. Mainly used in communications, big data, and image processing.

FPGA - Programmable logic with high computational efficiency, closer to the underlying I/O. Its logic is made editable through redundant transistors and interconnects. It is essentially instruction-free and does not require shared memory, so it can be more efficient than a CPU or GPU. Mainly used in smartphones, portable mobile devices, and cars.

VI. Practical Applications of NPU

The NPU performs AI scene recognition during photography and uses its computations to retouch the image.

The NPU analyzes the light sources and the detail in dark areas to synthesize a super night-mode shot.

The NPU enables voice-assisted operation.

Working with GPU Turbo, the NPU predicts the next frame so it can be rendered early, making games smoother.

The NPU predicts touch input to improve tracking and responsiveness.

With Link Turbo, the NPU distinguishes the different network speed requirements of foreground and background apps.

The NPU intelligently adjusts resolution based on the game's rendering load.

The NPU saves power by reducing the AI computation load during gameplay.

The NPU dynamically schedules the CPU and GPU.

The NPU assists with big-data-driven advertising push.

The NPU powers AI word association (prediction) in input methods.

VII. Description of various processing units

APU: Accelerated Processing Unit, AMD's product line that combines CPU and GPU on one chip to accelerate image processing.

BPU: Brain Processing Unit, the embedded processor architecture led by Horizon Robotics.

CPU: Central Processing Unit, the mainstream core processor of PCs.

DPU: Dataflow Processing Unit, an artificial intelligence architecture proposed by Wave Computing.

FPU: Floating-Point Unit, the floating-point module in a general-purpose processor.

GPU: Graphics Processing Unit, a multithreaded SIMD architecture designed for graphics processing.

HPU: Holographic Processing Unit, Microsoft's holographic computing chip and device.

IPU: Intelligence Processing Unit, the artificial intelligence processor product from Graphcore, a company DeepMind has invested in.

MPU/MCU: Microprocessor/Microcontroller Unit, usually a RISC-architecture product for low-compute applications, such as ARM Cortex-M series processors.

NPU: Neural Network Processing Unit, a general term for a new class of processors based on neural network algorithms and acceleration, such as the Cambricon series from the Institute of Computing Technology, Chinese Academy of Sciences.

RPU: Radio Processing Unit, Imagination Technologies' radio processor that combines Wi-Fi, Bluetooth, FM, and processor functions in a single processor.

TPU: Tensor Processing Unit, Google's specialized processor for accelerating artificial intelligence algorithms. The first-generation TPU targets inference, and the second-generation TPU targets training.

VPU: Vision Processing Unit, a specialized chip from Movidius (acquired by Intel) for accelerating image processing and artificial intelligence.

WPU: Wearable Processing Unit, a wearable system-on-chip product from Ineda Systems that integrates a GPU, a MIPS CPU, and other IP.

XPU: An FPGA-based intelligent cloud accelerator with 256 cores, announced by Baidu and Xilinx at Hot Chips 2017.

ZPU: Zylin processor, a 32-bit open-source processor.
